Center for Government Interoperability

Articles

How to run an IT department

Efficient delivery of IT services

We have seen from the Enterprise Focused Development (EFD) article that the way to build software systems is to have a data modeler engage with the business customer to continually refactor the data model until client requirements can be met through clean joins in a third normal form database.

The number one priority of any chief information officer is to create an environment that scales the EFD concept out to the rest of the organization.

- Remove the obstacles separating data modelers and in-house developers from the clients. Understand what your critical innovation gateways are and make sure they are not blocked (see the data architect duties article).

- Use EFD for all software development

- Whenever there is any change anywhere in the organization, use the Opportunities Check List to find improvement opportunities

- Continually ask stakeholders to identify their priorities, and study them. Priorities are different from a list of requirements: studying them puts you in touch with the stakeholders' reality. Use this insight to create an IT vision with the data modelers.

- Retain ownership of your core mission data model. Never use an outside vendor's proprietary data model. The data model is the mechanism for delivering IT services to clients. Once the ability to change the data model is handcuffed, there is no agility and no easy way to add new services. Your data modeler might as well move to another organization. All vendor incentive to innovate is gone, and the client is locked forever into rigid requirements.

- Open source - the old question used to be "Build it or buy it?" Today, the answer is to build it and make it open source so that other government organizations can share it and it never has to be bought or built again.

- Fill top management positions with experienced data modelers and coders. It is a common misconception that IT managers don't have to be experienced coders because running an IT shop is like driving a car: you don't have to know exactly how every piece of automotive technology works to drive it. Actually, IT managers aren't driving the car. They are building the car for the business side, which is driving it. No one can manage something they don't understand; the best they can do is stay even. A non-coder manager cannot create a great vision for the organization, because that takes an experienced person who understands how the thousands of tiny conditionals and loops that make up software can be marshalled into innovative solutions. At best, a manager who is neither a coder nor a data modeler can only copy what exists, but we live in the wild west era of computing, where many solutions haven't been invented yet. This is why so many of today's great technology founders and leaders have been coders: Bill Gates, Steve Wozniak, Elon Musk, Jeff Bezos, Sergey Brin. Would they put a non-programmer in charge of their IT department?

A CIO's top priority should be the data. Finance, server administration, networking and other skills are not specific to the organization's mission, so it is not effective to have a CIO whose only experience is in, for example, network design. The CIO needs to be a data expert because only the data is specific to the clients.

To successfully run an IT department, create an environment where data modelers are given authority to engage with clients, understand their priorities, and deliver IT services that exceed client expectations.

Data table management

Managing the future of government business systems

The purpose of this article is to focus federal and state government attention on its most important business IT task.

There is a common thread in a vast number of business problems that most problem solvers cannot see. It is government's largest business problem: incomplete and incorrect table design.

Tables model the business. They are the heart and soul of how IT enables government to fulfill its mission. Tables are the closest approximation to the organization's processes. When tables are not designed correctly, IT is out of sync with government's mission.

This problem manifests itself daily in constantly changing disguises, keeping perplexed managers busy fixing seemingly different issues. It happens because badly designed tables, including tables whose keys fail to connect them to related tables and so leave them stranded as data islands, force layers of bad programming code to form around them for decades. The result is a never-ending ripple effect that extends to other computer systems, where clients see the problem camouflaged as missing functionality in many areas of their business processes.

Here is a common business example. Let's say your spouse signed up for the household electricity account, and the monthly statements show only your spouse's name because there was no data field for additional names. If you needed to prove residency by showing the electric bill, you could not. A one-to-many relationship (one account, many account holders) was required, but the programmer created only a single field for the account owner. Every phone call you make to the electric company requires an explanation that your home's electric bill is not in your name.
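To make the electric bill example concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table and column names (account, account_holder, holder_name) are hypothetical. Instead of a single owner column on the account, a child table holds one row per person on the account, so a statement can list every holder with a simple join.

```python
import sqlite3

def build_schema(conn: sqlite3.Connection) -> None:
    """Create a hypothetical one-to-many schema: one account, many holders."""
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces REFERENCES only when this is on
    conn.executescript(
        """
        CREATE TABLE account (
            account_id      INTEGER PRIMARY KEY,
            service_address TEXT NOT NULL
        );
        -- One row per person named on the account, instead of a single owner field.
        CREATE TABLE account_holder (
            account_id  INTEGER NOT NULL REFERENCES account(account_id),
            holder_name TEXT NOT NULL,
            PRIMARY KEY (account_id, holder_name)
        );
        """
    )

def holders_on_statement(conn: sqlite3.Connection, account_id: int) -> list[str]:
    """Return every holder name to print on the account's statement."""
    rows = conn.execute(
        """
        SELECT h.holder_name
        FROM account AS a
        JOIN account_holder AS h ON h.account_id = a.account_id
        WHERE a.account_id = ?
        ORDER BY h.holder_name
        """,
        (account_id,),
    )
    return [name for (name,) in rows]

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    build_schema(conn)
    conn.execute("INSERT INTO account VALUES (1, '12 Elm St')")
    conn.executemany(
        "INSERT INTO account_holder VALUES (?, ?)",
        [(1, "Pat Lee"), (1, "Sam Lee")],
    )
    print(holders_on_statement(conn, 1))  # ['Pat Lee', 'Sam Lee']
```

Adding a third resident later is just another row in account_holder; no column, screen or report layout has to change.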

Another example is the frustratingly slow medical care that soldiers serving in the Iraq War, which began in 2003, received at Walter Reed Army Medical Center. The medical care process could have been streamlined by improved table design allowing better data sharing between the Department of Defense and the Department of Veterans Affairs. [GAO 2007]

The importance of integrating government data cannot be overstated. The lack of integration affects every government organization through immense additional expense and lost services, and it can spell the difference between life and death. While government generally understands that it should move towards integration, a striking example of government disintegrating its own data occurred around 2003, when the Federal Emergency Management Agency (FEMA) took integrated disaster management components that had been functioning efficiently within government and contracted them out to private sector companies. The private companies did not have the integration needed to communicate as seamlessly as the government's system had, and disaster response during Hurricane Katrina in 2005 broke down. More than a thousand people died, partly because of the failed cooperation between the private companies. Government must learn that if it contracts out work, it must remain in charge of integration. [Leonard 2006]

Outside the small group of people in the data modeling field, few can recognize the common thread in the three disparate problems above: incomplete and incorrect table design. Most business IT problems arise from this root cause, severely limiting IT alignment with government's mission.

Bringing enterprise data to efficiency perfection

The solution is for each government agency to create a position that functions as a data architect, as defined in OMB's Federal Enterprise Architecture framework, focused exclusively on keeping data modeled correctly. Modeling the data correctly must be broadly defined to include removing redundancy across all units of the organization, as envisioned by the Business Reference Model, and integrating related data with outside government organizations via the Data Reference Model. Enterprise Architecture is not solely data normalization on steroids, but that is the main idea.

The data architect's role goes to the heart of government IT problem resolution by focusing the strongest problem solver on the root cause of the biggest problem.

Much faster improvement to government would come from creating a position similar to a data architect but with the authority of the CEO, both within each organization and for the state or federal government as a whole. Giving data designers that much authority to re-engineer business processes enterprise-wide would avoid the slow road of voluntary cooperation within and between government agencies. This topic, Chief of Enterprise Integration, is discussed in a separate article; however, such a big organizational change will not happen soon. What follows is a roadmap to resolving this problem with the tools and people we have now.

Next: Recommendations for Implementing Enterprise Data Architecture

REFERENCES

GAO (2007). Information Technology: VA and DOD Continue to Expand Sharing of Medical Information, but Still Lack Comprehensive Electronic Medical Records. Report GAO-08-207T, released October 24, 2007.

Leonard, Herman B. and Howitt, Arnold M. (2006). "Katrina as Prelude: Preparing for and Responding to Katrina-Class Disturbances in the United States -- Testimony to U.S. Senate Committee, March 8, 2006." Journal of Homeland Security and Emergency Management, Vol. 3, Iss. 2, Article 5. Available at: http://www.bepress.com/jhsem/vol3/iss2/5

Government's strongest productivity machine

Government's Solution Machine

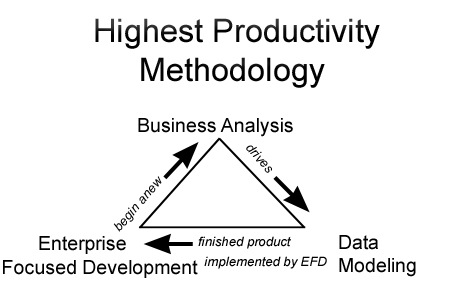

The heart of the most productive solution machine is a three-step repeating process.

- Business requirements, gathered from many sources by an enterprise integrator, drive:

- Prioritized, enterprise-mindful table modeling that quickly aligns IT to requirements identified through business intelligence. Programming code and the finished product are then implemented by:

- Enterprise Focused Development, which uses small development teams and creates high quality systems integrated with Enterprise Architecture.

The process then begins again at the top, and the whole organization's data is continuously and nimbly refocused. I call this frequent refocusing of IT to business requirements super-alignment to government's mission.

Every information executive should ensure that the above process is operational in their organization. It sets up the rest of the IT infrastructure to successfully support government's mission.

Once the above system reaches its cadence, clients will notice an organization-wide change where new services are rapidly delivered.

Budget solutions: Reducing government cost through Enterprise Focused Development

IT Budget Solutions: Reducing Government Cost Through Enterprise Focused Development

Enterprise Focused Development (EFD) offers significant, easy-to-implement cost reduction.

The small size of highly productive teams makes projects very inexpensive.

It frees the organization from financial risks right from the outset of project development and produces the highest quality systems of any methodology.

A world leader in constructive analysis of project failures, the Standish Group, published a study showing that large IT projects fail or are challenged more often than they succeed. [Standish 2006] Waterfall methodology was listed as one of the main reasons for project failure. Enterprise Focused Development addresses the main reasons for project failure and will save government substantial sums of money.

These very large savings should interest government.

A solid example, because of the large number of competitors and the level playing field, is the personnel system created with Enterprise Focused Development. It cost at most one-tenth as much as any of its competitors and provided much higher quality: a savings of at least 90%.

To roughly estimate yearly savings to government, let's conservatively assume that instead of reducing development costs by 90% compared with current methods, Enterprise Focused Development reduces them by only 2%. For a $3 billion state development budget, a 2% reduction saves taxpayers $60 million annually, plus gives consumers better service.
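The estimate is simply the budget multiplied by the assumed savings rate. A small Python sketch (the function name is illustrative) reproduces the $60 million figure and, for comparison, what the 90% rate from the personnel system example would imply for the same budget.

```python
def estimated_annual_savings(development_budget: float, savings_rate: float) -> float:
    """Savings = budget x assumed fractional reduction in development cost."""
    if not 0.0 <= savings_rate <= 1.0:
        raise ValueError("savings_rate must be a fraction between 0 and 1")
    return development_budget * savings_rate

budget = 3_000_000_000  # $3 billion state development budget, as in the text
for rate in (0.02, 0.90):  # conservative 2% vs. the 90% of the personnel system example
    print(f"{rate:.0%}: ${estimated_annual_savings(budget, rate):,.0f}")
# 2%: $60,000,000
# 90%: $2,700,000,000
```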

Additional savings will come from projects that would otherwise have failed without Enterprise Focused Development. These amount to at least hundreds of thousands of dollars.

Costs are reduced through savings in time and in the number of staff required to manage the project. Enterprise Focused Development reduces staff costs by eliminating the separate risk and requirements management work that the iteration process already handles. Each quick refocusing of the entire project saves many hours of work and prevents costly errors, and savings multiply as iterations progress. In traditional methodologies, problems become evident during the integration stage, usually too late to save the project from major cost and time overruns.

Very low cost, high quality, fast implementation, low risk, client satisfaction. These are strong motivational factors for implementing Enterprise Focused Development.

Applied to all federal and state government, Enterprise Focused Development will save hundreds of millions of dollars directly, and additional savings will be gained because higher quality systems require much less maintenance and much less frequent rewriting. Enterprise Focused Development savings over the years would amount to billions of dollars.

Here is an additional EFD benefit: many failed projects should never have been conceived because they were based on flawed logic. Enterprise Focused Development identifies and stops these projects much earlier than waterfall methods, saving taxpayers the money that would have been spent completing them only to find out they had no value. Example: in the 1980s, one of the deans at a university where I worked ordered a major, badly conceived project. He overruled the CIO and me, demanding that the urgent project go forward. To address this problem, I put no work into the project except building a data entry module for the main table. Next, I explained that stage two of the project was for the dean to begin data entry. Somehow he and his staff never had time for data entry on this urgent project, and a few months later they acknowledged that the project should be scrapped. Had I not involved him in the project design and testing, many hours would have been wasted completing a worthless project. Enterprise Focused Development not only flushes out problems in valid projects; it also flushes out valueless projects that somehow make it too far into the project queue. It is continuous, reality-based vetting that outperforms all other methodologies in saving taxpayers' money.

Categorized by cost, there are three types of IT projects that can benefit from Enterprise Focused Development. Assumption: projects with a capital outlay over $500,000 require independent project management and reporting.

1. Projects reducible to under $500,000. Projects over $500,000 have additional reporting and oversight requirements that greatly increase cost. Enterprise Focused Development can reduce expenses so effectively that some projects that would have exceeded the $500,000 threshold now drop below it, and then those additional oversight and reporting costs do not exist. Projects that cut costs enough to avoid independent project oversight, reporting and other mandated overhead will clearly demonstrate cost savings that can be accurately predicted.

2. Over $500,000. Very large cost savings are possible, following the principle that the larger the project, the more likely Enterprise Focused Development is to outperform other methodologies. Time, scope, budget, risk and customer satisfaction goals are very likely to be met with Enterprise Focused Development.

3. Under $500,000. The smaller the project, the more likely it is that any project methodology will succeed. Enterprise Focused Development will still produce savings in all project management areas: time, budget, scope, quality, risk and client satisfaction. It will outperform other methodologies, but will be more valuable for client satisfaction than for budget, scope and time. The sheer number of small projects gives great potential for cost savings through Enterprise Focused Development.
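The three cost categories above can be expressed as a small decision rule. This is a sketch under stated assumptions: the $500,000 threshold comes from the text, but the names are hypothetical, and a project costing exactly $500,000 is treated here as "under" because the text only says oversight applies to outlays over that amount.

```python
from enum import Enum

OVERSIGHT_THRESHOLD = 500_000  # capital outlay above which independent oversight applies

class Category(Enum):
    REDUCIBLE_BELOW_THRESHOLD = 1  # category 1: EFD pulls the project under the threshold
    OVER_THRESHOLD = 2             # category 2: still over the threshold with EFD
    UNDER_THRESHOLD = 3            # category 3: under the threshold to begin with

def categorize(traditional_estimate: float, efd_estimate: float) -> Category:
    """Place a project in one of the three cost categories, given two cost estimates."""
    if traditional_estimate <= OVERSIGHT_THRESHOLD:
        return Category.UNDER_THRESHOLD
    if efd_estimate <= OVERSIGHT_THRESHOLD:
        return Category.REDUCIBLE_BELOW_THRESHOLD
    return Category.OVER_THRESHOLD

print(categorize(800_000, 450_000))  # Category.REDUCIBLE_BELOW_THRESHOLD
```

Category 1 is the interesting case: the savings include not only the cheaper development itself but the oversight and reporting overhead that no longer applies.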

REFERENCES

Hartmann, Deborah (2006). "Interview: Jim Johnson of the Standish Group." InfoQ, August 25, 2006. Available at: http://www.infoq.com/articles/Interview-Johnson-Standish-CHAOS

Recommendations for Implementing Enterprise Data Architecture

We must define table modeling as the main tool for bringing IT into alignment with government's mission, and it must remain government's focus in perpetuity.

The definition of table modeling here is broad in that it involves harmonizing data with outside governmental organizations, sharing data and systems with those organizations, and bringing continuity to table analysis and table updates driven by business intelligence.

Here is an overview of how to start implementing enterprise data architecture so that table design is the primary focus.

1. Appoint someone to be a data architect for each organization. They must be highly skilled in data modeling and understand how to transform business requirements into data tables.

2. Whenever a table is planned, created or changed, the data architect will review it for enterprise integration opportunities. This includes considering potential future projects, checking external organizations for integration potential and building in business intelligence during the design phase.

3. Each time there is change, there is an opportunity to bring all of government's data one step closer to third normal form. Removing redundant tables, bringing SOA opportunities into focus, harmonizing data, and moving business rules out of programming code and into tables are some of the opportunities that change brings. Once business rules are out of programming code and in tables, they are de-siloed and available to be shared enterprise-wide. Legacy systems should be included, because even when they are replaced, conversion will be far easier when their files are normalized. Maintenance headaches from these systems will also be greatly reduced.

4. Following this process, IT will move towards what I call super-alignment to the organization's mission where IT can nimbly deliver new features and solve problems in the most efficient way.
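Step 3 recommends moving business rules out of programming code and into tables. Here is a minimal sketch of what that means, assuming a hypothetical license renewal fee rule; the table name, license classes and amounts are invented for illustration. The same rule is shown first buried in one program's if/elif chain, then stored as rows that any system can query, so a rule change becomes a data update rather than a code release.

```python
import sqlite3

# Before: the rule is buried in one program and invisible to other systems.
def renewal_fee_hardcoded(license_class: str) -> int:
    if license_class == "commercial":
        return 120
    elif license_class == "standard":
        return 45
    return 30

# After: the same rule stored as data in a shared table (hypothetical schema).
def build_rule_table(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE renewal_fee_rule (license_class TEXT PRIMARY KEY, fee INTEGER NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO renewal_fee_rule VALUES (?, ?)",
        [("commercial", 120), ("standard", 45), ("default", 30)],
    )

def renewal_fee(conn: sqlite3.Connection, license_class: str) -> int:
    """Look up the fee; fall back to the 'default' row when the class is not listed."""
    row = conn.execute(
        "SELECT fee FROM renewal_fee_rule WHERE license_class = ?", (license_class,)
    ).fetchone()
    if row is None:
        row = conn.execute(
            "SELECT fee FROM renewal_fee_rule WHERE license_class = 'default'"
        ).fetchone()
    return row[0]

conn = sqlite3.connect(":memory:")
build_rule_table(conn)
# The table reproduces the hard-coded behavior exactly...
assert all(renewal_fee(conn, c) == renewal_fee_hardcoded(c)
           for c in ("commercial", "standard", "student"))
# ...but changing the rule is now one data update that every consumer sees.
conn.execute("UPDATE renewal_fee_rule SET fee = 50 WHERE license_class = 'standard'")
print(renewal_fee(conn, "standard"))  # 50
```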

Super-alignment to the organization's mission is clearly possible because E.F. Codd's invention, the relational model for database management, which is based on relational algebra, brings data to efficiency perfection using easy-to-follow rules. Simply bringing organizational data into third normal form resolves business problems throughout the organization and improves IT alignment to the organization's mission in the most powerful and cost effective way.

It is amazing that such a simple, mathematically based solution is available to solve so many problems.

Third normal form is smarter than programmers, analysts, managers and government. Implementing third normal form unthinkingly, blindly, yields more intelligent results than process-oriented humans do. Many times when I have deviated even slightly from strict normalization, I have been surprised to find hidden value in the strict form and have needed to go back and correct the table design.
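The core third normal form rule can be seen in a small example. The sketch below uses invented employee and department data. It removes a transitive dependency (dept_name depends on dept_id, which in turn depends on emp_id) by splitting one flat relation into two, rejects flat data that already contradicts itself, and confirms the split is lossless by joining back. It illustrates this one normalization step only; it is not a general normalization tool.

```python
from typing import NamedTuple

class FlatRow(NamedTuple):
    """Hypothetical unnormalized row: dept_name depends on dept_id, not on emp_id."""
    emp_id: int
    emp_name: str
    dept_id: int
    dept_name: str

def decompose(rows: list[FlatRow]) -> tuple[dict[int, tuple[str, int]], dict[int, str]]:
    """Split into employee(emp_id -> name, dept_id) and department(dept_id -> dept_name)."""
    employees: dict[int, tuple[str, int]] = {}
    departments: dict[int, str] = {}
    for r in rows:
        employees[r.emp_id] = (r.emp_name, r.dept_id)
        existing = departments.setdefault(r.dept_id, r.dept_name)
        if existing != r.dept_name:
            # The flat table allowed two rows to disagree about one department's name.
            raise ValueError(f"dept {r.dept_id} named both {existing!r} and {r.dept_name!r}")
    return employees, departments

def rejoin(employees: dict[int, tuple[str, int]], departments: dict[int, str]) -> list[FlatRow]:
    """Join back on dept_id; a lossless decomposition restores the original rows."""
    return [FlatRow(e, name, d, departments[d]) for e, (name, d) in sorted(employees.items())]

rows = [
    FlatRow(1, "Ana", 10, "Licensing"),
    FlatRow(2, "Ben", 10, "Licensing"),
    FlatRow(3, "Cy", 20, "Audit"),
]
emps, depts = decompose(rows)
assert rejoin(emps, depts) == rows
depts[10] = "Licensing and Permits"  # a rename is now one update, not one per employee
print(rejoin(emps, depts)[1].dept_name)  # Licensing and Permits
```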

Incrementally re-engineer systems during change opportunities

Each time there is a single integration improvement, IT removes subtle roadblocks to supporting the organization's mission. Then data silos inexorably become accessible, clients' problems disappear, maintenance problems are reduced and connectivity opportunities open up across government. This stepwise approach is a little like government being a boa constrictor when battling an enemy. Whenever its enemy breathes out, the boa's coils tighten a little more. In our case, the enemy is disintegrated data, so whenever there is change, our version of tightening our coils is to integrate the data a little more until data silos are gone and strong alignment to the organization's mission is accomplished. This incremental method is also the least expensive approach.

Each time there is change, the data architect should update an integration map showing where each affected table needs integration or is available for integration.

Normalization principles are immune to subjective bias so they should be easy to implement. But why then is IT in its current state? Don't IT managers have degrees in computer science and know this already? Shouldn't they have already fixed most IT business problems? Several factors impede integration.

- Programmers are rewarded for finishing projects quickly, not for looking for integration opportunities outside their project. Programmer skill at normalizing data within their own control is improving, but not beyond their unit or department. They are not trained to ask, "Does the next unit use the same table as I do?" These lost integration opportunities result in redundant data and processes. Managers like to show "project completed" to their supervisors. They do not encourage cross-departmental cooperation among programmers because of the added complexity or a lack of education, or simply because they are not collaboratively inclined. This is the main problem, and it can be summarized as a lack of willpower on the part of data managers to tackle data integration.

- Contractors and vendors, because they are temporary, create runaway code without enough supervision of table design, and create new databases without understanding how they could be integrated with the rest of the organization in the future. (A solution to this problem is discussed in a separate article.) Integration continuity is lost when data systems are deployed at different times by different vendors. This causes problems such as those in the Maricopa County, AZ justice system, where different agencies assigned multiple numbers to the same criminal case, confusing judges and attorneys.

- COTS (commercial off-the-shelf) software is not integratable with the rest of the organization's systems, because each government's core business systems are, by definition, unique.

- Budget structures do not encourage co-sponsorship of systems sharable by different government entities.

- Project managers from most schools of project management are not trained in enterprise architecture principles.

- Security concerns discourage sharing between organizations.

- Absence of Enterprise Focused Development methodologies is responsible for the lack of table improvements during system design and implementation.
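The Maricopa County example is a classic symptom of missing integration continuity. One common remedy, shown here only as a hypothetical sketch (agency names, case numbers and table layout are invented), is a crosswalk table that maps each agency's local case number to one shared master case identifier, so any agency's system can find every other agency's number for the same case.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE master_case (
        master_case_id INTEGER PRIMARY KEY
    );
    -- Each agency keeps its own numbering, but every local number points to one master case.
    CREATE TABLE case_crosswalk (
        agency         TEXT NOT NULL,
        local_case_no  TEXT NOT NULL,
        master_case_id INTEGER NOT NULL REFERENCES master_case(master_case_id),
        PRIMARY KEY (agency, local_case_no)
    );
    """
)
conn.execute("INSERT INTO master_case VALUES (1001)")
conn.executemany(
    "INSERT INTO case_crosswalk VALUES (?, ?, ?)",
    [("sheriff", "S-88-412", 1001), ("prosecutor", "P-2008-0057", 1001), ("court", "CR-3391", 1001)],
)

def related_numbers(agency: str, local_case_no: str) -> list[tuple[str, str]]:
    """Given one agency's number, list every agency's number for the same case."""
    return conn.execute(
        """
        SELECT other.agency, other.local_case_no
        FROM case_crosswalk AS mine
        JOIN case_crosswalk AS other ON other.master_case_id = mine.master_case_id
        WHERE mine.agency = ? AND mine.local_case_no = ?
        ORDER BY other.agency
        """,
        (agency, local_case_no),
    ).fetchall()

print(related_numbers("court", "CR-3391"))
# [('court', 'CR-3391'), ('prosecutor', 'P-2008-0057'), ('sheriff', 'S-88-412')]
```

A crosswalk is a repair for data that was never integrated; assigning the master identifier at the first point of contact would avoid the problem altogether.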

One of the key things that even experienced data managers underestimate is the high degree of normalization strictness needed to keep IT in alignment with the organizational mission. Even systems that have recently been rewritten from scratch begin to disintegrate if small changes are not evaluated for integration opportunities. This leads us to a very unusual conclusion not heard often in the IT world. If enterprise data were always kept fully normalized and updated for business rule changes, no rewrites of computer systems would be necessary. Even legacy systems would eventually offer as much value as new systems using the stepwise process above, and the only reason for migrating away from them would be to save money on cheaper platforms, but their data functionality would be perfect. This underscores the enormous value of focusing government on table modeling. Add up the cost of small, medium and largest system rewrites, unnecessary maintenance, unnecessary labor and lost functionality to see the true value of keeping data models fine-tuned. It is stupendous. Government IT must remain focused on table modeling.

Integration Help Desk

A centralized help desk should be created in each state to assist all of the state's agencies in connecting data between internal and external departments.

The help desk should include experts who offer SOA, security, web services and other technical help in establishing data exchanges. This would speed up implementation of the connections identified by the data architect in each organization, and free the data architect from work outside their core function: modeling data.

The following article describes the recommended duties of a data architect, who should be the chief person focused on modeling their organization and the government as a whole.


Recommendations for data architect duties

Data Architect Job Description

This article offers a description of the duties of a data architect or someone filling the role of enterprise data integration manager.

This article's goal is to prioritize data modeling in the data architect job description over secondary responsibilities, such as data recovery planning, that increasingly appear in data architect job descriptions.

By using data modeling methodologies, the data architect will provide energy at the points where energy is weakest in government: continuity in finding and managing collaborative opportunities, and integration between integratable but stovepiped systems so that data is re-tuned to the organization's mission on a daily basis.

The data architect absolutely must be highly skilled in modeling data. However, familiarity with hardware or any specific company's software should not be mandatory. The modeling duties should be paramount, and tasks not specific to integration can be assigned to others.

For large organizations, it is important to not dilute the data architect role beyond data modeling simply because keeping a whole organization's data model up to date is a full time job and the data modeling role is more important than anything else. Data modeling duties cleanly separate from other duties. For example, technically setting up an XML data exchange with another agency can be assigned to a programmer. But there must be a data architect there to identify the opportunity and recommend the exchange in the first place. Likewise, a DBA can replicate data or set up secure FTP processes to logically integrate data, but the responsibility for an enterprise-wide integration plan should remain within the data architect's duties.

Core function of the data architect

1. Model the data of the whole organization so that it's in 3rd normal form. Independent of where the data is stored or the processes that use it, this new view of the data reveals: (a) redundancy in the organization (b) where incompatibilities should be remedied (c) opportunities for sharing databases and services internally and externally (d) unanticipated capabilities.

2. Change the organization's data systems to mirror the model. This aligns the organization's resources to the organization's mission more efficiently than any other method.

Detailed data architect duties

To get started, data architects should set up a single point of contact for integration issues in each government organization by using a standardized naming convention for an email account that goes to the data architect's office, e.g., enterprise_integration@whitehouse.gov, enterprise_integration@omb.gov, enterprise_integration@dmv.ca.gov. Everyone on the data architect's team would receive a copy of each email so that backups can respond while individuals are on vacation. The address is just a standard contact name for each organization. Any data architect, or anyone requiring integration information, can then contact data architects in any other organization, federal or state, without needing to find a mailing list. The mailing list wouldn't need to be updated because the address would be permanent. Everything, including email address names, should be designed to encourage collaboration by default.

The data architect's checklist summarizes many of the duties below.

The data architect must get into the conception and planning loop early in all parts of the organization and analyze planned computer systems and data flows for all departments to make sure there is no redundancy, poor design or field misnaming. Ensure that all tables are in 3rd normal form. Analyze each new IT project with an eye towards maximum potential for enterprise-wide and government-wide integration. Experience has shown me that the data architect should not make the project design wait for their approval; that turns the data architect into a bottleneck. The data architect should leave the designers free to do what they want, but insist that they notify the data architect immediately, even when only a new table is designed, so the data architect can study it the same day it was planned. In the worst case, the designer would only need to backtrack a few days' work if the data architect found problems. This table analysis role would save the government the most money, because it would prevent expensive problems from being permanently locked into systems until they were replaced, perhaps 10 or even 30 years later.

Data harmonization and project management - review data fields so that the same field does not have different names for the same record throughout the enterprise. Promote a standard vocabulary enterprise-wide; for example, a field called "Distributed Cost" in one unit may be called "Cost Allocation" elsewhere. Where possible, use standard project management terms to help spread project management discipline throughout the enterprise. Train and collaborate with project managers so that project management methods include enterprise architecture.
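Harmonizing vocabulary can start with a centrally maintained synonym list. In this minimal sketch, only the "Distributed Cost"/"Cost Allocation" pair comes from the text; the mapping, the record shape and the function name are hypothetical. Each unit's field names are translated to one standard name, and any name without a mapping is returned for the data architect to review rather than passed through silently.

```python
# Hypothetical enterprise vocabulary: local field name -> standard field name.
STANDARD_NAMES = {
    "Distributed Cost": "Cost Allocation",
    "Cost Allocation": "Cost Allocation",
}

def harmonize(record: dict[str, float]) -> tuple[dict[str, float], list[str]]:
    """Rename a unit's fields to standard names; return unmapped names for review."""
    harmonized: dict[str, float] = {}
    unmapped: list[str] = []
    for field, value in record.items():
        standard = STANDARD_NAMES.get(field)
        if standard is None:
            unmapped.append(field)
        elif standard in harmonized:
            # Two local fields claim the same meaning: a conflict to resolve, not to guess.
            raise ValueError(f"two local fields both map to {standard!r}")
        else:
            harmonized[standard] = value
    return harmonized, unmapped

print(harmonize({"Distributed Cost": 1250.0, "Overhead Pct": 0.12}))
# ({'Cost Allocation': 1250.0}, ['Overhead Pct'])
```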

The data architect should promote regular business intelligence meetings with agency representatives, attend executive business goals and strategy meetings, and be an energizing force to align IT with the organization's mission.

Create a long-term, enterprise-wide plan to integrate all business systems and workflows for the whole organization, including the prioritized detailed steps and budget for the plan. Publish a yearly report of the plan's status. Publish high-level data relationship charts. Maintain centralized documentation of the integration process so that there is integration continuity. Build a database of the organization's databases and document fields that could potentially be shared enterprise wide, and also shared with other government entities, preferably added to a Data Reference Model system. The data architect should learn what all of the organization's systems do and start to model the whole organization. The big picture begins to form as opportunities are mapped out. There should be a comment area for each field where fixes or other issues can be documented.
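A "database of the organization's databases" can begin as a single metadata table. The sketch below is hypothetical (system, table and field names are invented): one row per field per system, with a note column for the per-field comment area, and a query that lists field names appearing in more than one system as candidates for sharing or consolidation. Matching on identical names only finds fields that are already named consistently, which is one more reason data harmonization matters.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Hypothetical metadata catalog: one row per field in each of the organization's databases.
    CREATE TABLE field_catalog (
        system_name TEXT NOT NULL,
        table_name  TEXT NOT NULL,
        field_name  TEXT NOT NULL,
        issue_note  TEXT,  -- fixes or other issues documented for this field
        PRIMARY KEY (system_name, table_name, field_name)
    );
    """
)
conn.executemany(
    "INSERT INTO field_catalog VALUES (?, ?, ?, ?)",
    [
        ("payroll",   "employee", "ssn",       None),
        ("payroll",   "employee", "hire_date", None),
        ("personnel", "staff",    "ssn",       "Stored without dashes"),
        ("training",  "trainee",  "ssn",       None),
        ("training",  "trainee",  "course_id", None),
    ],
)

# Fields that appear in more than one system are candidates for sharing or consolidation.
candidates = conn.execute(
    """
    SELECT field_name, COUNT(DISTINCT system_name) AS systems
    FROM field_catalog
    GROUP BY field_name
    HAVING COUNT(DISTINCT system_name) > 1
    ORDER BY systems DESC, field_name
    """
).fetchall()
print(candidates)  # [('ssn', 3)]
```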

Actively look for collaborative enterprise-wide and government-wide opportunities and watch for potential conflicts with other such systems. NIEM, the National Information Exchange Model, should be explored as the schema-sharing tool for state and federal government. NIEM has training classes, a help desk and analysts who check that submitted schemas for sharable data meet standards. Identify SOA opportunities, internally and externally, and suggest new SOA opportunities that don't exist yet.

Co-produce an expanded library of best practices of integration management with the data architect community. Coordinate with the top government CIO.

Centralize documentation on what design changes need to be made to convert poorly functioning existing systems. The reality is that there often isn't enough money to replace a poorly functioning system. But the data architect should have the specs ready so that the replacement system is already fully planned out. Clients should send ideas for the new system to the data architect on an ongoing basis instead of at the last minute when the new system is about to replace the old one. Create a database of each business unit's capabilities wish list, updated yearly and whenever there are changes, so that there is the most complete picture of the agency's goals and needs. Make sure big integration projects obtain financial oversight agency approval. Keep a record of every client request to IT, indicating whether it was turned down or not, to understand the agency better and further document any possible need for integration. Metrics: keep track of maintenance that would have been unnecessary if integration had been implemented.

Implement quality assurance to ensure that clients of shared applications receive good service. The purpose is to discourage client desertion, where clients create their own duplicate system because the shared one is not responsive to them.

Educate all employees to think from an enterprise-wide viewpoint. Create an environment where enterprise-wide integration is promoted throughout all facets of government.

Change control - a data architect can minimize risk during change by virtue of a deep understanding of organizational data. For example, if a legacy system is going to be consolidated, the data architect could recommend a stepwise approach: first harmonize the data, then consolidate the tables, and finally change only the programming code, so that moving to the new system is no longer an "all or nothing" panic-filled project.

Build trust. The data architect should listen to clients, understand their needs, be transparent so that clients understand the reasons for everything they do, and fulfill promises quickly to establish a good track record. Once trust is established, the data architect can get the cooperation needed to reshape the organization's information systems.

Participate in a worldwide forum on integration issues.

Collectively, data architects should research opportunities and advise the top federal or state CIO on changing or designing policies and legislation that promote integration, such as policy and budget structures that encourage system sharing between different organizations. They should defend enterprise integration against policies that cause fragmentation, because some governmental policies may actually promote enterprise disintegration. An example is the way that financial oversight agencies exercise fiscal control over IT projects. An oversight agency may author statewide policy that tends to cancel expensive IT integration projects and instead approve smaller-budgeted add-ons to obsolete systems that were supposed to be replaced. When this pattern is repeated over and over, the net result is institutionalized statewide fragmentation, because only stovepipe applications are approved. The short-term benefit of these oversight agency clampdowns is that they reduce sensationalized failures of large IT projects. However, if this shortsighted policy is allowed to continue, the long-term waste of money and loss of functionality will vastly outweigh any short-term gains. Bigger is not always better, but data architects need to advise oversight agencies when smaller add-ons to a broken system are worse than system rewrites.

The data architect should teach a course in data modeling to all newly hired IT and business managers, systems analysts, project managers (including the whole PMO department), developers and programmers, so that they learn how to fit in with the agency's overall enterprise architecture plan and how to work with the data architect. This will prevent problems such as middle managers buying what seems to be a cheap COTS solution that is in reality a stovepiped data silo creating decades of interoperability problems. Instead of COTS, an in-house built solution, where appropriate, would last forever because it would bring the whole enterprise one step closer to full integration.

Finally, a data architect should encourage all employees to take an interest in integration matters and send suggestions that will improve integration. An intranet website should educate employees about enterprise-wide integration. The data architect should create a culture of collaboration, and an environment where planning for information sharing is an integral part of the organization's personality.

The data architect position would, in effect, guarantee that government is perpetually guided towards integration through standardized policies and procedures that prioritize data modeling. The data architect would consistently align information systems to government's mission.

The next article discusses a new software development methodology that should be used to implement the data architect's integration projects and all business IT projects: Enterprise Focused Development.

Centralizing Government Systems

Centralizing Government Data Systems

The hidden expense: redundant systems in government

There's nothing new about the idea of centralizing government data systems. Who can't see that efficiencies are to be gained by not having to reinvent the wheel?

Two missing concepts, if implemented, would make centralization successful:

1. Client satisfaction enforced by a 3rd party

2. Policy to mandate participation in shared government systems

There also need to be budget mechanisms that encourage different government agencies to share the cost of centralized apps, but this issue is not discussed here.

Client satisfaction enforced by a 3rd party

Case Study #1

Many years ago I was asked to design and implement a very large computer system for a state agency; a highly sharable system. I presented a solid case to cancel the project and to instead use the state's centralized system already in place. That is where I learned the fundamental truth about shared applications. If clients do not get good functionality, they will bail out of the shared system and get their own faster than you can blink an eye. I've rarely seen such dislike of an IT system. The client asked me to reject the centralized app. So I thought, "then I'll just look for some other state agency's system and share that app with my agency". I called agencies that had had their own apps, but the system requirements were so complex that they had to abandon theirs and use the state's centralized system. During my conversations with the other agencies, I saw the same unhappy attitude about the centralized system and anger about being forced to use it. The strong emotions just poured over the phone line into my ears.

What was missing was an auditing organization sitting on the outside, having the authority to force the managers of the shared application to fix their system. For state entities, the best authority would be the state CIO.

Why an outside authority? Because this is a recurring pattern in which the problem needs to be adjudicated by a specialized group using standards and methodologies, which is more effective than an informal agreement between the clients and the providers of services. It is difficult for the shared app provider to police itself. Clients and centralized app managers need a referee.

How much did the above problem cost? One agency paid $500,000 to a private company to create its own system, but that system failed. Several other agencies had their own staff write their systems, but those all failed too. I used two years of taxpayer money writing mine. The clients were very happy that my system gave them the functionality they were looking for, but the best solution would have been to fix the shared app.

How often does this happen? There is tension between the shared application provider and its clients in almost every case. However, serious problems are caused by a lack of imagination on the part of the centralized application provider, who cannot grasp the adverse effect that poor service is having on the client. Unfortunately, this is often the case.

What should the outside organization do? Survey clients methodically, make recommendations, mandate solutions if appropriate, and keep clients from jumping ship and creating their own applications at tremendous cost to taxpayers. The organization's scope should be government-wide. If the system is not shared with outside government organizations, then only the CEI would need to ensure quality control.

With this organizational change, there would be a responsible entity discovering there was a problem. In the example above, no responsible entity knew there was a problem and no one reported it anywhere.

Policy to mandate participation in shared government systems

Case Study #2

Take a typical state agency. Each department head within the agency runs their turf the way they like. When a new employee is hired, where do they go first? Personnel. As the new employee settles into the agency, it becomes evident that unless the agency's systems are all integrated, there is much redundant work. The worst case is when an employee leaves the agency and their passwords still work from the outside because the personnel department did not notify all affected parts of the agency. Often, the IT department asks personnel to let them create an integrated system so that when a new employee first comes on board, or quits the agency, their information radiates out to the correct people throughout the rest of the agency automatically. Can IT get the personnel department's cooperation? No, because personnel doesn't want the responsibility of entering or maintaining the information. Can IT force personnel to change? No. Should government be in a position where IT has to persuade the department to voluntarily integrate? No.
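Case Study #2 describes new-hire and separation information "radiating out" automatically. One common way to get that behavior is a publish-subscribe arrangement: personnel records the event once, and every subscribed system reacts. The Python sketch below assumes that design. `PersonnelHub`, the event kinds and the two downstream systems are hypothetical names used only for illustration; a real deployment would more likely use a message queue or database triggers than in-process callbacks.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PersonnelEvent:
    employee_id: str
    kind: str  # hypothetical event kinds: "hired" or "separated"


Handler = Callable[[PersonnelEvent], None]


@dataclass
class PersonnelHub:
    """Hypothetical hub: personnel publishes an event once; every subscriber is notified."""
    subscribers: list[Handler] = field(default_factory=list)

    def subscribe(self, handler: Handler) -> None:
        self.subscribers.append(handler)

    def publish(self, event: PersonnelEvent) -> None:
        for handler in self.subscribers:
            handler(event)


# Hypothetical downstream systems that must track hires and separations.
active_accounts: set[str] = set()
badge_access: set[str] = set()


def remote_access_system(event: PersonnelEvent) -> None:
    if event.kind == "hired":
        active_accounts.add(event.employee_id)
    elif event.kind == "separated":
        active_accounts.discard(event.employee_id)  # outside passwords stop working


def building_badge_system(event: PersonnelEvent) -> None:
    if event.kind == "hired":
        badge_access.add(event.employee_id)
    elif event.kind == "separated":
        badge_access.discard(event.employee_id)


hub = PersonnelHub()
hub.subscribe(remote_access_system)
hub.subscribe(building_badge_system)
hub.publish(PersonnelEvent("E1001", "hired"))
hub.publish(PersonnelEvent("E1001", "separated"))
print(active_accounts, badge_access)  # → set() set()
```

The design choice that matters here is that personnel enters the information once; adding another system that needs to know about separations means adding a subscriber, not another manual notification.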

What is missing is the outside integration entity described above, or the organization's empowered CEI, to break the stalemate with the authority to mandate integration.

The CEI or outside authority will have the experience, knowledge, standards, methodologies and skill to determine if the changes should take place. This will bring about government integration a lot faster than depending upon the local parties to voluntarily work together. Some managers put their own ambitions ahead of the enterprise-wide mission, making voluntary integration impossible.

First, government needs to have the authority to make sure clients get the quality of service they need from shared apps. Next, it needs to have the authority to require that clients adopt enterprise-wide integration. This means organizational change: no clout...no integration.

Case Study #3

Some years ago there were a few state entities that did not yet have a web presence. I tried to persuade one reluctant agency by creating a sample web site for them in my spare time. Other agencies had their own web sites but refused to listen to my recommendations to put their email address on their sites. The agencies' resistance to consumer access mystified me. Then, due to an order from the governor, all agencies in quick succession had a web presence, email contact info and an online location where consumers could file complaints against the agencies.

The above example shows that in some circumstances centralized control is the best solution. An organization must be created to systematically direct change in government where integration creates efficiency, working methodically with standards and best practices instead of relying on the informal way the problem was discovered in the case study above. The eventual compliance by the state entities made a world of difference to consumers previously blocked from state government.

1. Identifying redundant systems and planning to replace them with centralized ones is a beginning, but not enough. Clients' drive to have good, functional systems is greater than their concern for saving money. They can, and often will, find a way to circumvent a shared application to get their own system when the manager of the shared application does not listen to their needs. Do you like giving up control or waiting for some external entity to do time-sensitive work for you when your own people can do it faster and better? An outside organization needs to have the authority to make shared application managers listen so that they will gain the trust of clients giving up their own systems.

2. Managers of non-IT departments are often slow or reluctant to assist in the big picture of enterprise-wide data flows. A CEI or outside organization needs to have the authority to integrate an entire organization and mandate the cooperation of key departments. However, without quality control through a third party, this is too heavy-handed. The third party needs both the authority to make service acceptable to the client, and the authority to require the client to adopt the centralized system.

Even a cursory look at the web sites of state CIOs or the federal OMB shows that centralization is coming. The above suggestions, including the creation of an empowered CEI to implement them, will advance successful centralization.

Return on Investment Chart

Projected savings from integrating government. Estimates are general and not based on detailed work breakdown structure.

| Integration activity generating ROI | Savings (ongoing yearly ROI unless noted) | New functionality created by the activity |
|---|---|---|
| Rigorous table normalization and expert table design | 40% less maintenance and redundancy; more reliability | 30% |
| Cross-agency integration and centralization of applications and processes | 30% less human work | 15% |
| Internal agency integration: combining and centralizing applications and processes | 25% less human work | 18% |
| Project management best practices support | 2% less human work; fewer stovepiped projects; more interagency collaboration; change control | 1% |
| Enterprise Focused Development methodology, used on all business IT projects | 80% of total IT project budget (one-time savings) | 32% |

Discussion Ideas for Mitigating Risk During Software and Hardware Updates

Risk Management

Introduction

There are many opportunities to minimize risk in software implementation and hardware installation. The role of risk mitigation becomes more important the larger the project is, or the more impact it has. This paper explores methods to minimize risk and recommends establishing a Risk Management Policy.

Scope

Risk herein pertains to risk during software migration to production or during hardware installation. Related methodologies are PMBOK (which defines traditional risk management) and software development life cycle (SDLC) methodologies such as Agile, Waterfall and Spiral. This paper addresses specialized migration planning concerns beyond PMBOK and SDLC and does not supersede them.

Stakeholder list and policy impact

Stakeholders: IT, PMO, business clients, change control board, ITIL

Impact to current policy:

- Change Control Board

- Project Management planning guide

- SDLC

Methodology

The general methodology involves arranging project schedules so that project components are separated out and vetted in a stepwise manner.

Example: A component is being added to the mainframe that consists of a new table and new programming code. Instead of moving them to production at the same time for client use after testing in the test environment, move the table to production first and test it alone for a day or less without the programming code (test with ad hoc queries and joins that only programmers can see). This may unearth a host of potential problems such as permissions authorization, size, data-type constraints, compatibility with the OS, etc., but will not interfere with clients using the rest of the system. All of the above problems could have appeared on production-implementation day, throwing the implementation schedule off and causing clients to wait in standby mode during their scheduled testing time. With this methodology, these problems are already out of the way before implementation day.
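The table-first step can be sketched in Python with SQLite standing in for the production database. Everything here is hypothetical (the `licensee` and `renewal` tables, the `fee >= 0` rule); the point is that the new table is checked on its own with ad hoc queries inside a savepoint, and the test rows are rolled back so clients never see them. SQLite has no user permissions, so the permission checks mentioned above would need the real DBMS.

```python
import sqlite3


def smoke_test_new_table(conn: sqlite3.Connection) -> list[str]:
    """Run ad hoc checks against the newly migrated 'renewal' table, then undo test rows.

    Returns a list of problems found (empty if every check passes).
    """
    exists = conn.execute(
        "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = 'renewal'"
    ).fetchone()
    if not exists:
        return ["table 'renewal' is missing"]

    problems: list[str] = []
    conn.execute("SAVEPOINT smoke")
    try:
        # A valid test row should insert and join to an existing production table.
        row = conn.execute("SELECT license_no FROM licensee LIMIT 1").fetchone()
        if row is None:
            problems.append("no licensee rows to join against")
        else:
            conn.execute("INSERT INTO renewal (license_no, fee) VALUES (?, ?)", (row[0], 50.0))
            joined = conn.execute(
                "SELECT COUNT(*) FROM renewal r JOIN licensee l "
                "ON l.license_no = r.license_no WHERE r.license_no = ?",
                (row[0],),
            ).fetchone()[0]
            if joined != 1:
                problems.append(f"join returned {joined} rows, expected 1")
        # An invalid row should be rejected by the CHECK constraint.
        try:
            conn.execute("INSERT INTO renewal (license_no, fee) VALUES ('X', -5)")
            problems.append("negative fee was accepted")
        except sqlite3.IntegrityError:
            pass
    finally:
        # Undo every test row so clients never see them.
        conn.execute("ROLLBACK TO smoke")
        conn.execute("RELEASE smoke")
    return problems


# isolation_level=None lets the explicit SAVEPOINT control the transaction.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.executescript("""
CREATE TABLE licensee (license_no TEXT PRIMARY KEY, name TEXT NOT NULL);
INSERT INTO licensee VALUES ('L-1', 'Jane Doe');
-- Only the new table is migrated; no application code uses it yet.
CREATE TABLE renewal (
    license_no TEXT NOT NULL REFERENCES licensee(license_no),
    fee        REAL NOT NULL CHECK (fee >= 0)
);
""")
print(smoke_test_new_table(conn))  # → []
print(conn.execute("SELECT COUNT(*) FROM renewal").fetchone()[0])  # → 0
```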

The idea is to break a project down to the highest number of components that have vetting value and test them early during non-critical times, including migrations to production, to reduce risk as much as possible. Analysis is required to:

- Determine how a component can be broken down into smaller components to reveal risk reduction opportunities. Opportunities include designing a component so it can be tested independently, then collectively, during non-critical times.

- Identify stakeholders such as downstream programmers to see if implementation design can be optimized for them.

- Plan in what order component testing and implementation should be scheduled. Determine if early testing will reveal opportunities for the rest of the implementation plans. Look for ways to bring clients into the testing process as early as possible.

- Determine how far into the production environment the component can be integrated and tested without incurring risk. The further the integration into production, the better.

- Design implementation so that each change can be backed out to the original system for all project teams at any stage.

- Design testing so that downstream programmers receive daily, real and full production data during early project stages.
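The ordering and back-out items in the list above can be made concrete with a dependency graph. This Python sketch assumes each component lists the components it depends on (the component names are hypothetical) and uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to produce a one-component-at-a-time migration order. Backing out in the reverse order removes dependents before the things they rely on.

```python
from graphlib import TopologicalSorter

# Hypothetical project components, each mapped to the components it depends on.
dependencies = {
    "new_table": set(),
    "table_permissions": {"new_table"},
    "batch_program": {"new_table", "table_permissions"},
    "online_screen": {"batch_program"},
    "downstream_extract": {"new_table"},
}


def migration_plan(deps: dict[str, set[str]]) -> tuple[list[str], list[str]]:
    """Return (migrate_order, backout_order).

    Each component is migrated and vetted on its own, only after everything it
    depends on; backing out runs in reverse so dependents are removed first.
    """
    order = list(TopologicalSorter(deps).static_order())
    return order, list(reversed(order))


migrate, backout = migration_plan(dependencies)
for step, component in enumerate(migrate, start=1):
    print(f"step {step}: migrate and vet {component}")
print("back-out order:", backout)
```

Where several components are ready at the same time, the sorter picks one arbitrarily; scheduling which of them goes first (for example, the one clients can test earliest) is still a planning decision.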

What is new in this methodology:

- Treatment of the production environment as an extension of the testing environment.

- Identifying migration of groups of components to production concurrently as a risk

- Programmer teams have a policy mechanism for collaborating with other programmer teams

- A formal risk management document added to programmer teams' SDLC

- Project Management Office has a formal risk management document added to its operation procedures

- Migration and installation planning begins during project conception so that stakeholders can become involved as early as possible.

Treatment of the production environment as an extension of the testing environment is important because production may have patches, data, security settings and other environmental differences that the test environment lacks, and these can affect whether components that worked correctly in the test environment succeed after migration.

Early stakeholder identification is important. For example, suppose Team A plans a major change that affects Team B. If Team B is brought in early in the conception stage, they may be able to recommend a project design that avoids funneling all change implementation into one instant switchover from the old to the new system. Otherwise, Team B loses control of their time schedule and is compelled to migrate everything into production at once with a very small tolerance window for errors. The goal is to avoid introducing unnecessary errors and staff stress by intelligently configuring implementation timelines, which produces a much wider tolerance window. As another example, if a field length is increased for Team A, Team B may be able to increase their field length early and fully test it in the test environment, then in the production environment, because field length testing doesn't require actual Team A data to be in production yet. This can take substantial pressure off Team B because good design planning early on has stretched out the time they have to implement.

Below is an example of identifying the Legal Department as a stakeholder and bringing them into implementation planning early.

Example 3: Assume that two regulatory agencies, Board A and Board B, will be merged into one agency. Here is a broad outline of possible risk management steps (besides standard PMBOK):

- Harmonize data

- Centralize and consolidate data

- Switch-enable programming code

Harmonization could include:

- Legal Department reviewing laws, terms, and definitions that the agencies share that might have conflicting meanings needing resolution. E.g., (a) The same phrase might have a different meaning for each agency or (b) each agency could have different legal terms for the same legal meaning. These must be worked out before any coding begins.

- Status code harmonization to make sure that status codes of each agency represent the same thing.
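Status code harmonization can be checked mechanically once the agencies agree on a shared code set. The Python sketch below assumes hypothetical codes for Board A and Board B. It translates each board's codes, collects codes that have no agreed meaning yet, and lists shared codes that only one board produces; those are items for the harmonization review rather than errors.

```python
from typing import Optional

# Hypothetical status codes for each board, mapped to an agreed shared code set.
BOARD_A_TO_SHARED = {"A": "ACTIVE", "I": "INACTIVE", "R": "REVOKED"}
BOARD_B_TO_SHARED = {"ACT": "ACTIVE", "EXP": "INACTIVE", "SUS": "SUSPENDED"}


def harmonize(codes: list[str], mapping: dict[str, str]) -> tuple[list[Optional[str]], set[str]]:
    """Translate agency codes to shared codes; collect codes with no agreed meaning."""
    translated: list[Optional[str]] = []
    unmapped: set[str] = set()
    for code in codes:
        if code in mapping:
            translated.append(mapping[code])
        else:
            translated.append(None)  # needs a decision before any coding begins
            unmapped.add(code)
    return translated, unmapped


def one_sided_codes(map_a: dict[str, str], map_b: dict[str, str]) -> set[str]:
    """Shared codes produced by only one board: review items for harmonization."""
    return set(map_a.values()) ^ set(map_b.values())


translated, unmapped = harmonize(["ACT", "SUS", "PND"], BOARD_B_TO_SHARED)
print(translated)  # → ['ACTIVE', 'SUSPENDED', None]
print(unmapped)    # → {'PND'}
print(sorted(one_sided_codes(BOARD_A_TO_SHARED, BOARD_B_TO_SHARED)))  # → ['REVOKED', 'SUSPENDED']
```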

Centralizing data could include: Replacing both agencies' tables with a single centralized table before programming code is changed so that problems are isolated to data, identified early, and not combined with other programming problems on implementation day.
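One possible way to centralize the data before touching programming code is sketched below in Python and SQLite, with hypothetical table and column names: merge both boards' tables into one `license` table tagged with the source board, then replace the old tables with views of the same names so unchanged programs can keep reading. This is an assumption about technique, not the only option. The views here are read-only, so programs that write to the old tables would still need triggers or code changes.

```python
import sqlite3


def consolidate(conn: sqlite3.Connection) -> None:
    """Merge both boards' license tables into one table in a single transaction."""
    try:
        conn.executescript("""
        BEGIN;
        CREATE TABLE license (
            source_board TEXT NOT NULL CHECK (source_board IN ('A', 'B')),
            license_no   TEXT NOT NULL,
            holder_name  TEXT NOT NULL,
            PRIMARY KEY (source_board, license_no)  -- numbers may collide across boards
        );
        INSERT INTO license SELECT 'A', license_no, holder_name FROM board_a_license;
        INSERT INTO license SELECT 'B', lic_id, name FROM board_b_license;
        DROP TABLE board_a_license;
        DROP TABLE board_b_license;
        -- Views with the old names and columns let unchanged programs keep reading.
        CREATE VIEW board_a_license AS
            SELECT license_no, holder_name FROM license WHERE source_board = 'A';
        CREATE VIEW board_b_license AS
            SELECT license_no AS lic_id, holder_name AS name
            FROM license WHERE source_board = 'B';
        COMMIT;
        """)
    except sqlite3.Error:
        conn.rollback()  # leave the original tables untouched on any failure
        raise


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE board_a_license (license_no TEXT PRIMARY KEY, holder_name TEXT NOT NULL);
CREATE TABLE board_b_license (lic_id TEXT PRIMARY KEY, name TEXT NOT NULL);
INSERT INTO board_a_license VALUES ('A-100', 'Ann Lee');
INSERT INTO board_b_license VALUES ('B-200', 'Bo Chan');
""")
consolidate(conn)
print(conn.execute("SELECT COUNT(*) FROM license").fetchone()[0])  # → 2
print(conn.execute("SELECT name FROM board_b_license").fetchall())  # → [('Bo Chan',)]
```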

Switch-enabling programming code could include: Writing programming code in a way that allows concurrent implementation of both new and old systems in production. The choice of which one runs, or if both run simultaneously, can be determined by a conditional, programmatic switch based on a "yes", "no" or "both" value in a data field. If, for example, there are many users, this provides a good back-out method to revert to the old system with minimal down time.
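A minimal Python sketch of the "yes", "no" or "both" switch, assuming "yes" means run the new system, "no" means run the old one, and "both" means run both and compare while the old result stays authoritative. The fee functions are hypothetical stand-ins for the old and new code paths, and the dictionary stands in for the data field that holds the switch value.

```python
def old_fee(years: int) -> float:
    """Hypothetical legacy calculation."""
    return 100.0 * years


def new_fee(years: int) -> float:
    """Hypothetical replacement calculation."""
    return 90.0 * years + 10.0


def compute_fee(years: int, switch: str, mismatches: list[str]) -> float:
    """Route to the old system, the new system, or both, based on the stored switch value."""
    if switch == "no":
        return old_fee(years)
    if switch == "yes":
        return new_fee(years)
    if switch == "both":
        old, new = old_fee(years), new_fee(years)
        if old != new:
            mismatches.append(f"years={years}: old={old} new={new}")
        return old  # assumption: the old result stays authoritative while both run
    raise ValueError(f"unknown switch value: {switch!r}")


# Stand-in for the row in a control table that holds the switch.
switch_row = {"new_fee_system": "both"}
log: list[str] = []
print(compute_fee(1, switch_row["new_fee_system"], log), log)  # → 100.0 []
print(compute_fee(2, switch_row["new_fee_system"], log), log)  # → 200.0 ['years=2: old=200.0 new=190.0']
```

Setting the stored value back to "no" is the back-out: the old code path is still deployed, so no redeployment is needed.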

In the above example, component vetting in production has been spread out to non-critical times. Had everything been migrated into production at one time, many problems occurring at the same time would make implementation much more complicated.

The above is only a general description. The data centralization step might be omitted so that the entire implementation would involve concurrent systems. Case-by-case analysis is required to determine variables such as: "Is redundant data entry required to implement two concurrent systems?"

Risk Management Policy

To bring about implementation-design collaboration of different teams, it is recommended that a Risk Management Policy be established.

The policy would be a simple memorandum requiring that project teams meet with appropriate stakeholders, including other teams, to plan risk management using the checklist in Appendix A as a guide. Policy compliance would be established by:

- Adding the methodology to programmers' SDLC

- Adding the methodology to Project Management procedures

- Adding the methodology to the Change Control Procedures.

Implementation plan

The risk management policy and methodology themselves should be implemented in a stepwise manner with prototype testing before mandatory use.

Performance Measurement and Process Improvement

Each risk management effort would be measured by a follow-up survey of stakeholders affected by the methodology (Appendix B). The history of its use and recommendations for improving the methodology or policy would be recorded, and recommendations would be logged on the risk management forum.

Responsible parties

- PMO: Improve risk management methodology and policy.

- PMO: Prototype testing and moderating risk management forum discussions.

- CIO: Resolves policy disagreements.

- Project managers: Risk management policy compliance.

- Quality Management Team: Conducts follow-up surveys (Appendix B).

Future Expansion And Enterprise Architecture Considerations

Analysis after implementation would indicate whether there is value in further expanding the risk management policy. The policy is currently written with the narrowest scope, but the idea can be applied across many business processes.

1. The project scope can remain narrow, limited to software and hardware installation.

2. Risk management policy can be expanded to a broader policy overlapping PMBOK and SDLC areas.

3. The policy can be expanded to all areas because risk management methods can benefit many other areas and disciplines.

4. A Risk Management Team can be established to enhance all business units. The team can be added to the enterprise architecture process. In other words, the Risk Management Team would have an enterprise role in the same way that a data architect has.

Conclusion

The goal of the methodology is to implement change in a way that no longer becomes an "all or nothing" panic-filled project requiring hundreds of components and variables to work in perfection at implementation time. The premise is that there are many hidden opportunities to reduce risk that are revealed when this methodology is used.

Except for early stakeholder notification, these recommendations should be tailored to the individual project without rigid compliance when common sense dictates that low return on investment would result.

Designing for implementation will bring the benefits of less downtime and greater client satisfaction, and will simplify troubleshooting on complex hardware installations and software updates. Much of this can be achieved by simply rearranging the order in which components are introduced into production and tested.

As an additional benefit, over time, each team could establish a body of best practices, some of which would result from use of these guidelines. Much of this methodology can apply to any business undertaking and should be freely borrowed.

Appendix A

Risk Management Checklist

- Project name

- Project manager

- Stakeholder list and date they were notified of project conception

- Stakeholders were surveyed regarding their risk priorities

- Project has been analyzed for risk mitigation opportunities

- Project has been designed to give stakeholders daily, real and complete production data for testing purposes in the test environment as early as possible

- Project has been designed to give all programming teams opportunity to flexibly back out all the way to the old system at any stage of the project

- Analysis has been made to see if early testing will reveal opportunities for the rest of the implementation plans. If "yes", project implementation has been designed to optimize early testing of components by clients

- Project implementation has been designed to individually integrate as many components into production as possible without affecting current production operations

- Risk management survey has been completed or scheduled for relevant stakeholders

Appendix B

Risk Management Survey

- Name and role in the project: e.g., client, stakeholder, team member, etc.

- Project name

- Date

- Which risk management checklist items (Appendix A) were applied and not applied? For the ones that applied, were they useful? Why or why not?

- Was the risk management goal clearly communicated?

- Was the original notification of the risk management process communicated to you in a timely manner?

- Were you kept informed in a timely manner of risk-related developments that affected you, and were the communications clear, including the reasons for them?

- Did you receive timely and clear replies to your questions?

- Recommendations

CEI - Chief of Enterprise Integration

A new piece to solve the IT maturation puzzle: Building systems integration into government organizational structure

The purpose of this article is to recommend to federal and state government the formation of a new organizational personnel structure to guide the growth and maintenance of its business data systems.

A new position would be created to modernize IT job classification. The person in that role would bring a permanent focus to integrating all business data systems in government to more closely align IT to the government's mission.

For convenience of discussion and to emphasize the scope of the responsibilities, I've suggested the title of Chief of Enterprise Integration, CEI.

Governments are consolidating IT hardware, and ITIL is improving many areas of client services. What remains is to better coordinate business data integration. Who will guide the enormous growth of complexity? Left alone, IT managers will still adapt government business systems, but at extra cost and along a slow centralization learning curve. To address this challenge more efficiently, an organizational change is described here that will accelerate the integration of business systems.

There are major differences between this new position and the CIO, enterprise architects, systems integrators and data managers. The CEI would be similar to a data architect but would have authority equal to the CEO regarding data integration, in order to be able to mandate that resistant areas of government integrate and re-engineer their systems. This new position would solve the problem of slow and unfocused voluntary collaboration between government organizations.

I first conceived of the Chief of Enterprise Integration idea during FBI Director Robert Mueller's testimony before Congress regarding the lack of intelligence database connectivity after the 9/11 terrorist attacks on the World Trade Center in NYC. The FBI had the same data integration failings as every other government and private organization I had worked for. There needs to be a person with enough authority to optimize data configurations in every area of government without having progress impeded by bureaucracy. Lacking such a position weakens government's ability to respond to its citizens' needs.

The CEI's key responsibility would be to ensure that their organization works towards enterprise-wide data integration on an ongoing basis. To ensure that there is cooperation between unwilling organizational units, the CEI should have greater authority than all other positions except for the highest executive officer. A Chief of Enterprise Integration position needs to be created within every government organization.

A CEI would coordinate data integration in each organization, and all CEIs in turn would follow policies set by a top CIO, for example the state CIO. State or federal institutions would have integration managers dedicated to promoting efficiency within their organizations, but also have defined partners in the rest of government researching opportunities to cooperate with them. The objective is to ensure that all government organizations are working towards integration internally and externally with CEIs as strategic planners. This will align IT to government's mission more effectively than any other method.

The CEI's work would involve data integration processes described in the Federal Enterprise Architecture Framework (FEAF), but the CEI would add integration continuity and authority, and would define who the community of integrators is throughout government. The CEI helps define the people who use the tools and methods described in FEAF.

The CEI position would eliminate these problems: (1) large, unnecessary maintenance costs associated with poor database design (2) rewrites of large systems (3) poor functionality of systems (4) unnecessary human business procedures (5) redundant files and computer systems (6) incompatible systems.

Benefits that the CEI position would bring: (1) new features not envisioned by government organizations or the public as a result of optimal data configuration (2) greater efficiencies in government and more productivity (3) more collaboration between government entities (4) cost savings (5) institutional flexibility, ability to adapt to change (6) more time for computer programmers to add capabilities because many new capabilities would only require simple joins with far less coding.
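Benefit (6) rests on the idea that, once data is normalized and shared, many new capabilities reduce to a join. A small Python and SQLite illustration with hypothetical tables: if personnel and licensing both reference one `person` table, a report of state employees who also hold a professional license is a single query rather than a new application.

```python
import sqlite3

# Hypothetical normalized tables: personnel and licensing share one person table.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE person   (person_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE employee (person_id INTEGER PRIMARY KEY REFERENCES person(person_id),
                       department TEXT NOT NULL);
CREATE TABLE license  (license_no TEXT PRIMARY KEY,
                       person_id INTEGER NOT NULL REFERENCES person(person_id),
                       board TEXT NOT NULL);
INSERT INTO person   VALUES (1, 'Ann Lee'), (2, 'Bo Chan'), (3, 'Cy Diaz');
INSERT INTO employee VALUES (1, 'Revenue'), (3, 'Transportation');
INSERT INTO license  VALUES ('RN-10', 1, 'Nursing'), ('CPA-7', 2, 'Accountancy');
""")


def licensed_employees(conn: sqlite3.Connection):
    """New capability: state employees who also hold a professional license."""
    return conn.execute(
        """SELECT p.name, e.department, l.board
           FROM person p
           JOIN employee e ON e.person_id = p.person_id
           JOIN license  l ON l.person_id = p.person_id
           ORDER BY p.name"""
    ).fetchall()


print(licensed_employees(conn))  # → [('Ann Lee', 'Revenue', 'Nursing')]
```

If the two departments instead kept separate, unlinked copies of each person's name, the same report would require matching records across systems, which is the kind of extra coding this benefit refers to.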

CEI responsibilities

The main goal of the CEI is to use data integration to align IT to the mission of the organization.

CEI responsibilities would include those defined in the Federal Enterprise Architecture, and I have listed some additional ones:

1. Create a long-term, enterprise-wide plan to integrate all business systems, including the prioritized detailed steps for the plan. Publish a yearly report of the plan's status.

2. Analyze all planned computer systems and data flows for all departments in the organization to make sure that there is no redundancy, poor design, and field misnaming. Ensure that all tables are in 3rd normal form. Analyze each new IT project with an eye towards enterprise-wide and government-wide integration.

3. Look for collaborative enterprise-wide and government-wide opportunities and watch for potential conflicts with other such systems. Co-produce an expanded library of best practices of integration management with the CEI community. Coordinate with the top government CIO.

4. Publish high-level data relationship charts. Maintain centralized documentation of the integration process so that there is integration continuity.

5. Modify the workflow and data systems of other departments in the organization to advance enterprise-wide integration. To do this, the CEI's authority for system change would supersede other authorities except for the CEO.

6. Ensure that clients of shared applications receive good service.

7. Educate all employees to think from an enterprise-wide viewpoint.

8. Create an environment where enterprise-wide integration is promoted throughout all facets of government. This includes working collectively with other CEIs to recommend establishing or changing legislation, and formulating budget and operating policies for the whole government.
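Item 2 above asks the CEI to ensure that all tables are in 3rd normal form. Part of that review is spotting transitive dependencies, where one non-key column determines another. The Python sketch below (hypothetical data and column names) flags such candidates in sample rows. Sample data can only disprove a dependency, never prove it, so each flagged pair still needs confirmation from the business rules; columns whose sample values are all unique are skipped because they behave like candidate keys.

```python
# Hypothetical sample rows from a license table whose key is license_no.
COLUMNS = ["license_no", "board_code", "board_name", "holder"]
rows = [
    {"license_no": "L1", "board_code": "RN", "board_name": "Board of Nursing", "holder": "Ann"},
    {"license_no": "L2", "board_code": "RN", "board_name": "Board of Nursing", "holder": "Bo"},
    {"license_no": "L3", "board_code": "CPA", "board_name": "Board of Accountancy", "holder": "Cy"},
]


def determines(rows, lhs, rhs):
    """True if, in these rows, the lhs columns functionally determine column rhs."""
    seen = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        if key in seen and seen[key] != row[rhs]:
            return False  # same lhs values map to different rhs values
        seen[key] = row[rhs]
    return True


def transitive_candidates(rows, key, columns):
    """Pairs (a, b) of non-key columns where a appears to determine b."""
    non_key = [c for c in columns if c not in key]
    candidates = []
    for a in non_key:
        # A column with all-unique sample values behaves like a candidate key; skip it.
        if len({row[a] for row in rows}) == len(rows):
            continue
        for b in non_key:
            if a != b and determines(rows, [a], b):
                candidates.append((a, b))
    return candidates


print(transitive_candidates(rows, ["license_no"], COLUMNS))
# → [('board_code', 'board_name'), ('board_name', 'board_code')]
```

Either flagged pair suggests moving `board_name` into a separate board table keyed by `board_code`, so that each board's name is stored once.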

The CEI position would, in effect, guarantee that government is perpetually guided towards integration through standardized policies and procedures. The CEI would consistently align information systems to government's mission.

Even though a formal CEI classification does not yet exist, the core duties can and should be assigned to an existing employee. Government can then immediately gain many of the benefits of the CEI, such as a dedicated position to avert system fragmentation at the design stage of projects, recommendations for well-planned IT growth, and focused collaboration with outside entities.

The difference between the proposed classification and the informal position, which can be implemented immediately, is the authority to require other departments in the organization to implement integration. The lack of this authority is a serious problem, and it is described further in this and related articles on this web site. The empowered CEI job may appear abstract, but it is not. The lack of authority to mandate cross-departmental integration is so decisive that the 9/11 Commission specifically identified it as one of the factors that weakened U.S. intelligence before the September 11 attacks. The 9/11 Commission states that: Only presidential leadership can develop government-wide concepts and standards. Well-meaning agency officials [..] may only be able to modernize the stovepipes, not replace them. Currently, no one is doing this job. The purpose of this article is to propose legislation that establishes the CEI as the person who does that job.

The goal is to build collaboration into government organizational structure.

Until this classification is formally created, the recommendations found here can be implemented by any government IT department or, for that matter, any non-government one. Success depends on how good the systems integrator is at encouraging voluntary cooperation. Integration would still happen, but more slowly.

For convenience of discussion, the term CEI is used for both the empowered CEI and the position without the authority to mandate integration, except where the differences are discussed.

A unique aspect of this position is that it requires an expert in relational database design. Such an expert can recognize how the way a single field is handled at the micro level greatly affects the functionality and connectivity of the whole organization at the macro level. An experienced relational database designer could identify hidden future integration potential in a table design that programmers and project managers would otherwise miss. The database designer can also see opportunities for new services to many people throughout the organization, because knowledge of the data would no longer be locked up in a process-oriented system.

There is already a person in charge of data design for each individual project (the DBA/designer/project manager), similar to what I'm describing, but when that project is completed, they are no longer involved in keeping abreast of enterprise-wide integration matters, and a new person must learn it all over again for the next project. In large organizations, where is the integration glue that formally connects different sections of the same organization? Is there only informal cooperation with few standards? Does the organization experience system fragmentation when a manager who is not savvy about data design purchases a new application without knowing how it should fit in with the rest of the organization?

IT is not working optimally. Licensing applications are often built as separate systems; in one case, the applicant tracking program could not fully integrate with the licensing application because the two lacked a uniform identification number. A CEI would catch the significance of this field in the design phase, nipping any incompatibility problems in the bud. Because it wasn't caught, the problems it caused one agency lasted for years. The lack of a centralized employee database with connectivity to the personnel department is another example. The unnecessary maintenance costs resulting from these oversights have far exceeded the original cost of the systems.
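The missing uniform identification number is easy to picture in table form. In the minimal sketch below, which assumes both applications share one relational database, a single `person_id` is the key that both applicant tracking and licensing reference, so linking them is a plain join. The table and column names and the sample rows are hypothetical, and Python's built-in `sqlite3` module stands in for whatever database the agency actually uses.

```python
import sqlite3

SCHEMA = """
CREATE TABLE person (
    person_id INTEGER PRIMARY KEY,     -- the single, organization-wide identifier
    full_name TEXT NOT NULL
);
CREATE TABLE application (             -- applicant tracking
    application_id INTEGER PRIMARY KEY,
    person_id      INTEGER NOT NULL REFERENCES person(person_id),
    status         TEXT NOT NULL
);
CREATE TABLE license (                 -- licensing
    license_no   TEXT PRIMARY KEY,
    person_id    INTEGER NOT NULL REFERENCES person(person_id),
    license_type TEXT NOT NULL
);
"""


def licensed_applicants(conn: sqlite3.Connection):
    """Link applicant tracking to licensing through the shared person_id."""
    return conn.execute(
        """
        SELECT p.full_name, a.status, l.license_no
        FROM application AS a
        JOIN person  AS p ON p.person_id = a.person_id
        JOIN license AS l ON l.person_id = a.person_id
        ORDER BY l.license_no
        """
    ).fetchall()


def load_sample(conn: sqlite3.Connection) -> None:
    """Hypothetical sample rows for illustration."""
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO person VALUES (?, ?)", [(1, "Ana Ruiz"), (2, "Ben Cho")])
    conn.executemany("INSERT INTO application VALUES (?, ?, ?)",
                     [(10, 1, "approved"), (11, 2, "pending")])
    conn.execute("INSERT INTO license VALUES ('L-100', 1, 'contractor')")


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_sample(conn)
    print(licensed_applicants(conn))  # [('Ana Ruiz', 'approved', 'L-100')]
```

Without the shared key, the same question would need fuzzy matching on names or addresses, which is the kind of ongoing maintenance cost described above.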

Problems typically occur because programmers, frequently temporary contractors, write runaway code with too little supervision of table design, and create new databases without understanding how they could be integrated with the rest of the organization in the future. There is a lack of integration continuity when data systems are deployed at different times by different managers. This causes problems such as those in the Maricopa County, AZ justice system, where different agencies assigned multiple numbers to the same criminal cases, confusing judges and attorneys. A CEI would prevent these situations and maintain integration continuity. (Jailhouse Talk - Case Files - Integration Initiatives - CIO Magazine, Mar. 1, 2003, http://www.cio.com/article/31738/Integration_Initiative_for_Maricopa_County_Law_Enforcement)
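One common remedy for duplicate case numbering is a crosswalk: each case gets a single enterprise identifier, and every agency's existing number is recorded as an alias of it. The following is a minimal sketch of that idea, assuming a shared relational database. The table names, agencies, and case numbers are hypothetical and are not taken from the Maricopa County project.

```python
import sqlite3

SCHEMA = """
CREATE TABLE court_case (
    case_id INTEGER PRIMARY KEY            -- the single enterprise-wide case number
);
CREATE TABLE case_alias (
    agency   TEXT NOT NULL,                -- e.g. 'police', 'prosecutor', 'court'
    local_no TEXT NOT NULL,                -- the number that agency already uses
    case_id  INTEGER NOT NULL REFERENCES court_case(case_id),
    PRIMARY KEY (agency, local_no)         -- each local number maps to exactly one case
);
"""


def resolve(conn: sqlite3.Connection, agency: str, local_no: str):
    """Return the enterprise case_id for an agency's local number, or None."""
    row = conn.execute(
        "SELECT case_id FROM case_alias WHERE agency = ? AND local_no = ?",
        (agency, local_no),
    ).fetchone()
    return row[0] if row else None


def aliases(conn: sqlite3.Connection, case_id: int) -> list[tuple[str, str]]:
    """List every agency-specific number recorded for one case."""
    return conn.execute(
        "SELECT agency, local_no FROM case_alias WHERE case_id = ? ORDER BY agency",
        (case_id,),
    ).fetchall()


def load_sample(conn: sqlite3.Connection) -> None:
    """Hypothetical sample: three agencies, three numbers, one case."""
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces REFERENCES only when enabled
    conn.executescript(SCHEMA)
    conn.execute("INSERT INTO court_case (case_id) VALUES (500)")
    conn.executemany(
        "INSERT INTO case_alias VALUES (?, ?, ?)",
        [("police", "PD-88412", 500), ("prosecutor", "DA-7731", 500), ("court", "CR-0117", 500)],
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_sample(conn)
    print(resolve(conn, "prosecutor", "DA-7731"))  # 500
    print(aliases(conn, 500))
```

A judge or attorney holding any one of the three numbers reaches the same case, and the composite primary key stops one agency from attaching the same local number to two different cases.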

Many problems would have been avoided if there had been a Chief of Enterprise Integration position when the large systems of the 1990s were created. This is the one concept that has been missing from many government organizations. It would greatly and permanently enhance how all of the organization's components work together. It would help establish a common data vocabulary for the organization's sections, for example by standardizing the fiscal department's chart of accounts.

The CEI would analyze each new project down to the table design level before it is implemented and study its enterprise-wide interrelationships. Since that person would know about most tables in the organization, they would develop a deep knowledge of all of the organization's data processes and be able to maintain a constant overview of the changing data landscape. This makes it easier to discover new opportunities, avoid future conflicts, and keep a database from painting itself into a corner with no path to future connectivity, as in the Maricopa County example above.

There may already be some efforts towards coordinating work between different sections of an organization, but this is different in that the person responsible for enterprise integration would have to be experienced in relational database design and be a permanent coordinator of change in the organization to bring energy and focus to the integration process.

The establishment of the CEI position will strengthen an organization in two ways. (1) It will separate the job of data analysis from all other IT functions, such as security and hardware, so that there will be complete focus on aligning information integration to government's mission. The organization's core mission is reflected not in the hardware but in the information, which should be organized for optimum productivity. Organize the information first; then hardware, security, workflows, etc. can be better planned. (2) The position removes the weakness of being able to see the big picture without having the authority to make all departments work together. A good example of a position with authority to modify all departments is the Information Security Officer (ISO) in California state government. When the ISO orders everyone to set up methods to shred Social Security numbers that get thrown in the trash, it gets done faster than it would if the ISO did not have that authority.

A new organizational structure is needed. Technical innovation cannot solve the problem that will be handled by the CEI. What is needed is an organizational change. As government business systems grow in complexity, a specialized integration manager is required to guide the growth of these systems.

Duties

Description of CEI responsibilities

A broad description of CEI functions was outlined in the previous article, including their equivalents among FEA (Federal Enterprise Architecture) processes. Here are some different perspectives on the same functions, based on my experience.

Core function of the CEI

1. Model the data of the whole organization so that it's in 3rd normal form.

Independent of where the data is stored, or the processes that use it, this new view of the data reveals: (a) redundancy in the organization (b) where incompatibilities should be remedied (c) opportunities for sharing internally and externally (d) unanticipated capabilities

2. Change the organization's data systems to mirror the model.

This aligns the organization's resources to the organization's mission more efficiently than any other method.
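Point (a) of step 1, finding redundancy, can be illustrated with one classic normalization step. A transitive dependency exists when a non-key field depends on another non-key field instead of on the key; 3rd normal form removes it by moving the dependent field into its own table. The sketch below, on hypothetical permit data, tests whether such a dependency holds in the sample and, if it does, splits the field out. The column names and values are assumptions for illustration, and a dependency observed in sample data is only evidence; whether it is a real business rule still has to be confirmed with the data's owners.

```python
# Hypothetical flat extract from one department's system. county_name depends on
# county_code, not on the key permit_id: a transitive dependency, so the same
# county name is stored again on every permit.
PERMITS = [
    {"permit_id": 1, "county_code": "01", "county_name": "Alpha County", "fee": 50},
    {"permit_id": 2, "county_code": "01", "county_name": "Alpha County", "fee": 75},
    {"permit_id": 3, "county_code": "02", "county_name": "Beta County", "fee": 60},
]


def fd_holds(rows: list[dict], lhs: str, rhs: str) -> bool:
    """True if, in these rows, each value of lhs always appears with the same rhs."""
    seen: dict = {}
    for row in rows:
        if seen.setdefault(row[lhs], row[rhs]) != row[rhs]:
            return False
    return True


def split_out(rows: list[dict], lhs: str, rhs: str):
    """Move rhs into a lookup table keyed by lhs, removing the repeated copies."""
    if not fd_holds(rows, lhs, rhs):
        raise ValueError(f"{lhs} does not determine {rhs}; resolve conflicts first")
    main = [{k: v for k, v in row.items() if k != rhs} for row in rows]
    lookup = {row[lhs]: row[rhs] for row in rows}
    return main, lookup


if __name__ == "__main__":
    permits, counties = split_out(PERMITS, "county_code", "county_name")
    print(permits)
    print(counties)  # {'01': 'Alpha County', '02': 'Beta County'}
```

Once county names live in one lookup table, every other system that records a county can reference the same codes, which is where the sharing opportunities in points (b) and (c) start to appear.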

Example: Police departments have a database of recent crimes. The parole board has a database of parolees. Previously, the two systems did not interact. The CEI sees that a crime category field exists in both systems. The CEI normalizes the data and unlocks a new benefit: the police can now download data on all recently released parolees who have previously committed the relevant crime and have an instant list of suspects.
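The join at the heart of this example can be sketched in SQL, run here through Python's built-in `sqlite3` module. This is a minimal sketch under assumptions: the table and column names, the shared `crime_category` code table, the sample rows, and the 180-day meaning of "recently released" are all hypothetical; real police and parole systems would differ.

```python
import sqlite3

SCHEMA = """
CREATE TABLE crime_category (          -- shared code table both systems now use
    code  TEXT PRIMARY KEY,
    label TEXT NOT NULL
);
CREATE TABLE crime_report (            -- police system
    report_id INTEGER PRIMARY KEY,
    category  TEXT NOT NULL REFERENCES crime_category(code),
    reported  TEXT NOT NULL            -- ISO date, YYYY-MM-DD
);
CREATE TABLE parolee (                 -- parole board system
    parolee_id   INTEGER PRIMARY KEY,
    name         TEXT NOT NULL,
    release_date TEXT NOT NULL         -- ISO date, YYYY-MM-DD
);
CREATE TABLE prior_conviction (        -- parole board system
    parolee_id INTEGER NOT NULL REFERENCES parolee(parolee_id),
    category   TEXT NOT NULL REFERENCES crime_category(code)
);
"""


def suspects(conn: sqlite3.Connection, report_id: int, window_days: int = 180):
    """Names of parolees released in the window_days before the report whose
    prior convictions include the reported crime's category."""
    return conn.execute(
        """
        SELECT DISTINCT p.name
        FROM crime_report AS r
        JOIN prior_conviction AS c ON c.category = r.category
        JOIN parolee AS p ON p.parolee_id = c.parolee_id
        WHERE r.report_id = ?
          AND p.release_date BETWEEN date(r.reported, ?) AND r.reported
        ORDER BY p.name
        """,
        (report_id, f"-{window_days} days"),
    ).fetchall()


def load_sample(conn: sqlite3.Connection) -> None:
    """Hypothetical sample rows for illustration."""
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO crime_category VALUES (?, ?)",
                     [("BURG", "Burglary"), ("FRAUD", "Fraud")])
    conn.execute("INSERT INTO crime_report VALUES (1, 'BURG', '2024-03-01')")
    conn.executemany("INSERT INTO parolee VALUES (?, ?, ?)", [
        (1, "Dale Park", "2024-01-15"),   # burglary record, recently released
        (2, "Eve Lin", "2024-02-01"),     # recently released, different category
        (3, "Finn Moss", "2022-06-01"),   # burglary record, released long ago
    ])
    conn.executemany("INSERT INTO prior_conviction VALUES (?, ?)",
                     [(1, "BURG"), (2, "FRAUD"), (3, "BURG")])


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_sample(conn)
    print(suspects(conn, 1))  # [('Dale Park',)]
```

The query itself is short; what makes it possible is that both systems use the same category codes, which is the normalization step described above.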

In case you missed the main point because it went by too fast, here it is again. Would you, on your own, have connected the police's need for the parole board's information as quickly and easily as you would by seeing that the same field was in both databases? That is the power of data modeling. The most productive solutions come from a formula that unlocks process-oriented systems, allowing you to match fields. Simple faith in normalizing the data will always produce brilliant results without errors or exceptions. That sounds a little grand, but it is the practical result of everyday use of 3rd normal form: less programming, faster project completion, less maintenance, fewer mistakes, more flexibility for the organization, and more opportunities. The article Centralizing Government Systems describes a case where I was able to complete the identical project that teams from many state agencies and a private company could not. The state agency that was responsible for the centralized application had six programmers working on it for 20 years and was not able to fully automate it, while mine was completed and fully automated in 2 years, and I was the only programmer. How did I do that with only obsolete mainframe technology to work with? By following the normalization rules more rigorously than the other designers.

Now multiply the single-field justice system example above by all the other fields of government to get a sense of how the CEI increases productivity.

Because it is based on relational algebra, the 3rd normal form concept leaves no room for ambiguity or subjectivity; it is the most productive tool that organizational integrators have.

Process-oriented managers, on the other hand, tend to think of systems as cars: when one gets old, they throw it away and get a new one. But data is not a stand-alone object, so process-oriented systems end up costing more in maintenance than the original system because the data is not integrated into the organization. This is why CEIs are the most qualified planners of government data systems.

Working with outside CEIs

Integration partners built into the classification structure

How would collaboration work with outside CEIs? The CEI community would create a centralized database of potentially sharable databases and business processes, and use a secure intranet to host group discussions on best practices and cross-jurisdictional integration.

Let's say they found a candidate with good ROI in centralizing county government court document handling systems, and the state CIO was in charge of the project. The first advantage that becomes evident is that there are no delays in determining who all the contacts are throughout the state: they are the county CEIs. One email would go to all the right people.
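The centralized catalog and the one-email lookup could be sketched as follows, assuming the catalog is a single relational table. The table layout, the sample counties, and the `example.org` addresses are hypothetical, and `sqlite3` stands in for whatever shared database the CEI community would actually use. The first query lists business processes that several jurisdictions run separately (centralization candidates); the second returns the CEI contacts for one of them.

```python
import sqlite3

SCHEMA = """
CREATE TABLE shared_catalog (
    jurisdiction     TEXT NOT NULL,   -- e.g. a county
    system_name      TEXT NOT NULL,   -- 'custom', 'manual', or a product name
    business_process TEXT NOT NULL,   -- e.g. 'court document handling'
    cei_email        TEXT NOT NULL    -- that jurisdiction's CEI contact
);
"""


def centralization_candidates(conn: sqlite3.Connection, min_jurisdictions: int = 2):
    """Business processes run separately by at least min_jurisdictions jurisdictions."""
    return conn.execute(
        """
        SELECT business_process, COUNT(DISTINCT jurisdiction) AS n
        FROM shared_catalog
        GROUP BY business_process
        HAVING COUNT(DISTINCT jurisdiction) >= ?
        ORDER BY n DESC, business_process
        """,
        (min_jurisdictions,),
    ).fetchall()


def contacts_for(conn: sqlite3.Connection, business_process: str) -> list[str]:
    """The CEI addresses to put on one email about a proposed centralization."""
    rows = conn.execute(
        "SELECT DISTINCT cei_email FROM shared_catalog "
        "WHERE business_process = ? ORDER BY cei_email",
        (business_process,),
    ).fetchall()
    return [email for (email,) in rows]


def load_sample(conn: sqlite3.Connection) -> None:
    """Hypothetical catalog entries for illustration."""
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO shared_catalog VALUES (?, ?, ?, ?)", [
        ("County A", "custom", "court document handling", "cei@county-a.example.org"),
        ("County B", "manual", "court document handling", "cei@county-b.example.org"),
        ("County C", "custom", "court document handling", "cei@county-c.example.org"),
        ("County A", "custom", "payroll", "cei@county-a.example.org"),
    ])


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_sample(conn)
    for process, count in centralization_candidates(conn):
        print(f"{process}: run separately by {count} jurisdictions")
        print("email:", ", ".join(contacts_for(conn, process)))
```

Recording `manual` as a system name keeps jurisdictions without any software visible in the catalog, which matters for the mixed situation described next.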

Some jurisdictions have their own custom document handling systems, and some handle documents manually. This demonstrates a second advantage: there are fewer delays in understanding the data organization of each county and how best to integrate the new system into it, because each county's CEI is already familiar with it. CEIs can best advise designers on local situations so that the specs conform to each county's mission, producing a more robust application. The system would be designed with future integration potential in mind.

Even at this early stage, it is clear that institutional change becomes an orderly process. Without CEIs, the centralization project would disrupt other managers who lacked the tools and methodologies for this field, and long delays would arise as the project percolated through the old-school project management system. With CEIs, managing change is routine, and it is handled in a scientific way using best practices and effective tools. CEIs accelerate integration and generate higher quality work.

Because the classification system has been modernized, the best-suited people are already in place at each site and are experienced at working together.

The combination of innovation, cross-agency consolidation, and the CEIs' integration plans for all government agencies is the IT blueprint for government-wide integration. CEIs keep the plan on track by providing internal and external coordination as the data landscape changes. Aligning databases to government's mission is a continuous process. CEIs are able to do this because they are in the loop at each organization whenever data designs change. They can make ongoing suggestions for government consolidation from the best possible vantage point, and they can most effectively handle the challenge that government centralization will not be a one-time event.

Details of some CEI functions